Introduction

Every website owner wants better search visibility, but many overlook a simple tool that helps Google find and index their pages faster. An XML sitemap acts as a roadmap for search engines, guiding them to the content you consider most important. Without one, new or poorly linked pages can take longer to be discovered and indexed, especially if you publish new content frequently or have complex navigation structures.

Search engines crawl an enormous number of pages every day, and pages without clear crawl paths are easy to miss. Even well-designed websites benefit from XML sitemaps because they can reduce indexing delays and help prevent valuable pages from being overlooked. Whether you run an ecommerce store with thousands of products or a blog with hundreds of posts, a sitemap helps search engines discover your content efficiently.

At Drip Ranks, we’ve helped businesses improve their indexing speed and organic rankings through proper sitemap implementation. This guide covers everything you need to know about creating, optimising, and submitting XML sitemaps. You’ll learn why they matter, how they work, common mistakes to avoid, and advanced strategies that give you a competitive edge.

By the end of this article, you’ll understand exactly how to leverage sitemaps for faster indexing and better search performance.

What is an XML Sitemap?

An XML sitemap is a structured file that lists the important URLs on your website, helping search engines discover and crawl pages more efficiently. The file uses Extensible Markup Language (XML) to organise URLs along with optional metadata such as the last modification date, change frequency, and priority. Search engines like Google, Bing, and Yahoo read this file to find the pages you want crawled and to understand how your content is organised.
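To make the format concrete, here is a minimal sketch in Python that builds a sitemap using only the standard library's `xml.etree.ElementTree`. The `urlset`, `url`, `loc`, and `lastmod` elements and the namespace come from the sitemaps.org protocol; the `build_sitemap` helper and the example.com URLs are hypothetical, chosen for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol, version 0.9.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return a sitemap document as a string.

    entries: list of (url, lastmod) tuples, where lastmod is a W3C
    date string such as "2024-05-01", or None to omit the tag.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in entries:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url  # must be an absolute URL
        if lastmod:
            ET.SubElement(url_el, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/about/", None),
]))
```

ElementTree escapes characters such as `&` in URLs automatically (writing `&amp;`), which the protocol requires.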

Think of an XML sitemap as a table of contents for your website, designed specifically for search engine bots. While human visitors use navigation menus, search crawlers rely on sitemaps to find pages that might otherwise remain hidden. This becomes especially important for sites with orphaned pages, deep navigation structures, or limited internal linking.

The sitemap tells search engines which pages you consider important and can also record when they were last updated and how often they change. This information can help crawlers prioritise their efforts and allocate resources more effectively across your domain.

Why is an XML Sitemap Important?

Large websites benefit most because crawlers have limited resources and can’t visit every page during each crawl session. The sitemap helps search engines identify priority content and understand which pages deserve immediate attention. This becomes crucial for ecommerce sites with seasonal products or news sites with time-sensitive content.

Sitemaps also improve crawl efficiency by pointing crawlers toward your canonical, high-value pages rather than low-value URLs like duplicate content or parameter-based variants. When you exclude these pages from your sitemap, you signal that search engines should focus on your best content, although exclusion alone does not stop them from crawling pages that are linked elsewhere. This focused approach tends to support better indexing rates over time.

How Does an XML Sitemap Work?

Search engines discover XML sitemaps through a few routes, mainly direct submission via Google Search Console or Bing Webmaster Tools and a Sitemap: line in your robots.txt file. Once found, crawlers download the file and parse the URLs listed inside. The sitemap gives search engines a structured list of your URLs, so they don’t have to rely solely on following links to find every page.
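The robots.txt route uses a `Sitemap:` line that holds the sitemap's full URL. As a hedged sketch, Python's standard `urllib.robotparser` (Python 3.8 or later) exposes these lines through `site_maps()`; the robots.txt content and the `sitemaps_from_robots` helper below are illustrative assumptions, not taken from a real site.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content; the URLs are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/

Sitemap: https://www.example.com/sitemap_index.xml
"""


def sitemaps_from_robots(robots_txt):
    """Return the sitemap URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap: lines are present.
    return parser.site_maps() or []


print(sitemaps_from_robots(ROBOTS_TXT))
```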

Each URL entry can include metadata intended to influence crawling behaviour. The last modification date (lastmod) helps crawlers determine which pages need recrawling, priority values (0.0 to 1.0) suggest relative importance within your site, and change frequency tags (changefreq) indicate how often pages update. Treat these as hints: Google has stated that it ignores priority and changefreq, and uses lastmod only when it is consistently and verifiably accurate.
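A crawler-side view helps show what the file actually carries. This minimal Python sketch (the `parse_sitemap` helper and the sample document are hypothetical) extracts each `<loc>` and optional `<lastmod>` with `xml.etree.ElementTree`, deliberately skipping `<priority>` and `<changefreq>`, which Google has said it ignores.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc><lastmod>2024-05-01</lastmod></url>
  <url><loc>https://www.example.com/about/</loc><priority>0.8</priority></url>
</urlset>"""


def parse_sitemap(xml_text):
    """Return (loc, lastmod) pairs; lastmod is None when the tag is absent."""
    root = ET.fromstring(xml_text)
    entries = []
    for url_el in root.findall("sm:url", NS):
        loc = url_el.findtext("sm:loc", default="", namespaces=NS).strip()
        lastmod = url_el.findtext("sm:lastmod", namespaces=NS)
        entries.append((loc, lastmod.strip() if lastmod else None))
    return entries


for loc, lastmod in parse_sitemap(SAMPLE):
    print(loc, lastmod)
```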

Search engines use sitemap data alongside other signals like internal linking structure, site authority, and crawl budget allocation. The sitemap doesn’t guarantee indexing or rankings, but it removes discovery barriers and helps your content get fair consideration. When combined with strong technical SEO foundations, sitemaps can speed up discovery and support consistent organic growth.

Types of XML Sitemaps

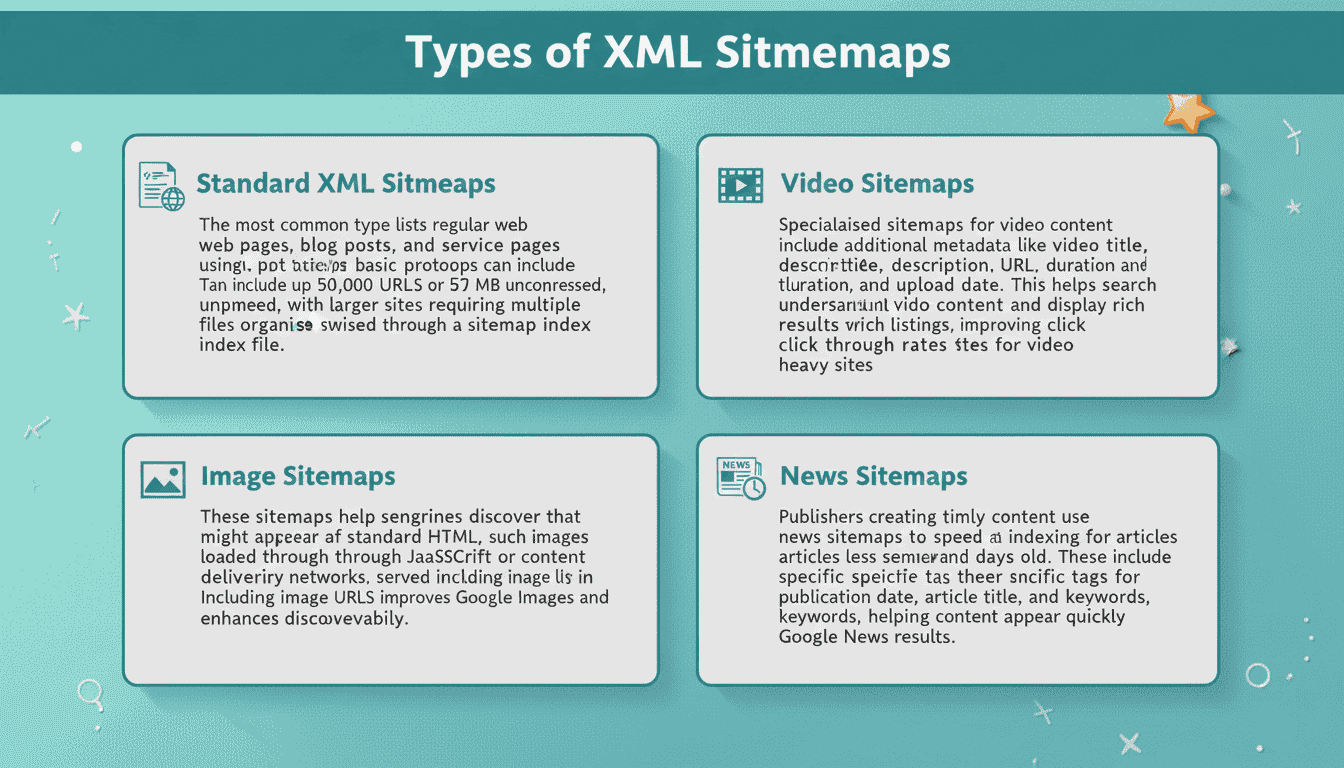

Standard XML Sitemaps

The most common type lists regular web pages, blog posts, and service pages using the basic sitemaps.org protocol. Each sitemap file can contain up to 50,000 URLs and must be no larger than 50 MB (52,428,800 bytes) uncompressed; larger sites need multiple sitemap files organised through a sitemap index file.
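When a site outgrows one file, the URL list has to be split. Below is a hedged Python sketch of one way to do it: the hypothetical `chunk_urls` helper starts a new chunk when either the 50,000-URL limit or an estimated 50 MB byte budget would be exceeded. The per-entry size estimate and the fixed 200-byte allowance for the XML declaration and `<urlset>` wrapper are simplifying assumptions; entries that also carry `<lastmod>` would need a larger estimate.

```python
from xml.sax.saxutils import escape

MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 52,428,800 bytes, measured uncompressed


def chunk_urls(urls, max_urls=MAX_URLS, max_bytes=MAX_BYTES, overhead=200):
    """Split URLs into lists that each fit within one sitemap file.

    overhead: assumed bytes for the XML declaration and <urlset> tags.
    """
    chunks, current, size = [], [], overhead
    for url in urls:
        # Approximate serialised size of one <url> entry (loc only).
        entry_size = len(f"<url><loc>{escape(url)}</loc></url>".encode("utf-8"))
        if current and (len(current) >= max_urls or size + entry_size > max_bytes):
            chunks.append(current)
            current, size = [], overhead
        current.append(url)
        size += entry_size
    if current:
        chunks.append(current)
    return chunks


urls = [f"https://www.example.com/products/{i}" for i in range(120_001)]
print([len(c) for c in chunk_urls(urls)])  # prints [50000, 50000, 20001]
```

Each chunk then becomes its own sitemap file, listed in a sitemap index.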

Video Sitemaps

Specialised sitemaps for video content include additional metadata like video title, description, thumbnail URL, duration, and publication date. This helps search engines understand video content and display rich results in search listings, which can improve click-through rates for video-heavy sites.
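As a sketch of the extra metadata, the Python below builds one video entry using Google's video sitemap namespace. The tag names (`thumbnail_loc`, `title`, `description`, `content_loc`, `duration`, `publication_date`) follow Google's video sitemap documentation as we understand it; the `video_sitemap` helper and all URLs are hypothetical, so check Google's current documentation for required and optional tags before relying on it.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
VIDEO = "http://www.google.com/schemas/sitemap-video/1.1"
ET.register_namespace("", SM)          # serialise the sitemap namespace as the default
ET.register_namespace("video", VIDEO)  # and the video extension with a video: prefix

VIDEO_TAGS = ("thumbnail_loc", "title", "description",
              "content_loc", "duration", "publication_date")


def video_sitemap(page_url, video):
    """Return a one-entry video sitemap; video maps tag names to values."""
    urlset = ET.Element(f"{{{SM}}}urlset")
    url_el = ET.SubElement(urlset, f"{{{SM}}}url")
    ET.SubElement(url_el, f"{{{SM}}}loc").text = page_url
    video_el = ET.SubElement(url_el, f"{{{VIDEO}}}video")
    for tag in VIDEO_TAGS:  # emit known tags in a fixed order
        if tag in video:
            ET.SubElement(video_el, f"{{{VIDEO}}}{tag}").text = str(video[tag])
    return ET.tostring(urlset, encoding="unicode")


print(video_sitemap("https://www.example.com/videos/intro", {
    "thumbnail_loc": "https://www.example.com/thumbs/intro.jpg",
    "title": "Product intro",
    "description": "A two-minute tour of the product.",
    "content_loc": "https://www.example.com/media/intro.mp4",
    "duration": 120,  # seconds
}))
```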

Image Sitemaps

These sitemaps help search engines discover images they might not find by parsing a page’s HTML, such as images loaded through JavaScript, and they can list image files served from content delivery networks. Including image URLs can improve visibility in Google Images and overall discoverability.
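Image entries are simpler, since Google's image sitemap extension mainly needs the image URL. This hedged Python sketch uses the `image:image` and `image:loc` tags from Google's image sitemap namespace; the `image_sitemap` helper and the example URLs are assumptions for illustration. The page URLs in `<loc>` stay on your own domain, while the image URLs may point to a CDN host.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE = "http://www.google.com/schemas/sitemap-image/1.1"
ET.register_namespace("", SM)          # default namespace for sitemap tags
ET.register_namespace("image", IMAGE)  # image: prefix for the extension


def image_sitemap(pages):
    """pages: dict mapping each page URL to the image URLs it shows."""
    urlset = ET.Element(f"{{{SM}}}urlset")
    for page_url, image_urls in pages.items():
        url_el = ET.SubElement(urlset, f"{{{SM}}}url")
        ET.SubElement(url_el, f"{{{SM}}}loc").text = page_url
        for img in image_urls:  # one <image:image> block per image
            image_el = ET.SubElement(url_el, f"{{{IMAGE}}}image")
            ET.SubElement(image_el, f"{{{IMAGE}}}loc").text = img
    return ET.tostring(urlset, encoding="unicode")


print(image_sitemap({
    "https://www.example.com/gallery/": [
        "https://cdn.example.com/images/a.jpg",
        "https://cdn.example.com/images/b.jpg",
    ],
}))
```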

News Sitemaps

Publishers of timely content use news sitemaps to speed up indexing of articles published within the last two days. These include specific tags for the publication name and language, publication date, and article title, helping content appear quickly in Google News results.
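The two-day window is easy to enforce when the file is generated. In this minimal Python sketch, the hypothetical `news_sitemap` helper skips articles older than two days and writes the `news:publication` (name and language), `news:publication_date`, and `news:title` tags from Google's news sitemap namespace. The article dictionaries and the publication name are placeholder assumptions.

```python
from datetime import datetime, timedelta, timezone
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS = "http://www.google.com/schemas/sitemap-news/0.9"
ET.register_namespace("", SM)
ET.register_namespace("news", NEWS)


def news_sitemap(articles, publication, language, now=None):
    """articles: dicts with 'url', 'title', and timezone-aware 'published'."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=2)
    urlset = ET.Element(f"{{{SM}}}urlset")
    for art in articles:
        if art["published"] < cutoff:
            continue  # older than two days: leave it to the standard sitemap
        url_el = ET.SubElement(urlset, f"{{{SM}}}url")
        ET.SubElement(url_el, f"{{{SM}}}loc").text = art["url"]
        news_el = ET.SubElement(url_el, f"{{{NEWS}}}news")
        pub = ET.SubElement(news_el, f"{{{NEWS}}}publication")
        ET.SubElement(pub, f"{{{NEWS}}}name").text = publication
        ET.SubElement(pub, f"{{{NEWS}}}language").text = language
        ET.SubElement(news_el, f"{{{NEWS}}}publication_date").text = art["published"].isoformat()
        ET.SubElement(news_el, f"{{{NEWS}}}title").text = art["title"]
    return ET.tostring(urlset, encoding="unicode")


print(news_sitemap([{
    "url": "https://www.example.com/news/launch",
    "title": "Example launches new product",
    "published": datetime.now(timezone.utc) - timedelta(hours=3),
}], publication="Example Times", language="en"))
```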

Best Practices for XML Sitemaps

Include Only Indexable Pages

Your sitemap should list only pages you want indexed, excluding duplicates, redirected URLs, password-protected pages, and noindexed content. This focus ensures search engines spend crawl budget on valuable content rather than wasting resources on pages that shouldn’t rank.
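A sitemap generator can apply these exclusions mechanically. The sketch below assumes a hypothetical crawl record (`Page`) holding each URL's own HTTP status, a noindex flag, its declared canonical URL, and whether it sits behind a login; the hypothetical `indexable` helper keeps only URLs that return 200, are not noindexed, are self-canonical, and are publicly reachable.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """Hypothetical crawl record for one URL."""
    url: str
    status: int          # HTTP status returned for this exact URL (redirects not followed)
    noindex: bool        # meta robots or X-Robots-Tag noindex present
    canonical: str       # canonical URL the page declares
    requires_login: bool = False


def indexable(pages):
    """Return URLs that belong in the sitemap."""
    return [
        p.url for p in pages
        if p.status == 200          # drops redirects and errors
        and not p.noindex           # drops noindexed content
        and not p.requires_login    # drops password-protected pages
        and p.canonical == p.url    # drops duplicates pointing elsewhere
    ]
```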

Keep Sitemaps Updated

Regenerate your sitemap whenever you publish new content, remove old pages, or make significant structural changes. Outdated sitemaps mislead crawlers and slow indexing, while current sitemaps ensure search engines always have accurate information about your site.

Use Absolute URLs

Always include full URLs with protocol (https://) and domain name rather than relative paths. The sitemaps protocol requires fully qualified URLs, and using them ensures search engines interpret locations correctly, which is especially important for multi-domain or subdomain setups.
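If your CMS stores relative paths, convert them before writing the sitemap. This is a small Python sketch using the standard `urllib.parse.urljoin` and `urlsplit`; the `to_absolute` helper and the rule that every URL must use https are assumptions for illustration, so relax the check if parts of your site legitimately use another scheme.

```python
from urllib.parse import urljoin, urlsplit


def to_absolute(base, path):
    """Resolve path against base and require a full https URL."""
    url = urljoin(base, path)
    parts = urlsplit(url)
    if parts.scheme != "https" or not parts.netloc:
        raise ValueError(f"not an absolute https URL: {url}")
    return url


print(to_absolute("https://www.example.com/", "/blog/post-1"))
print(to_absolute("https://www.example.com/blog/", "post-2"))
```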

Submit Through Search Console

Submit your sitemap URL in Google Search Console and Bing Webmaster Tools to monitor indexing status, identify errors, and track performance. These platforms provide valuable feedback about coverage issues, validation errors, and indexing success rates.

Compress Large Sitemaps

Large sitemaps can be compressed with gzip to reduce file size and speed download times. Compressed sitemaps transfer faster and reduce server load while maintaining full functionality, but the 50 MB limit applies to the uncompressed file, so compression does not let a single sitemap hold more URLs.
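Compression itself is a one-liner with Python's standard `gzip` module. In this hedged sketch, the hypothetical `compress_sitemap` helper refuses input above 50 MB because the size limit is measured on the uncompressed file; the resulting bytes would typically be saved under a name like `sitemap.xml.gz`.

```python
import gzip

MAX_UNCOMPRESSED = 50 * 1024 * 1024  # the limit applies before compression


def compress_sitemap(xml_text):
    """Return gzip-compressed sitemap bytes, enforcing the size limit."""
    raw = xml_text.encode("utf-8")
    if len(raw) > MAX_UNCOMPRESSED:
        raise ValueError("split the sitemap first; gzip does not raise the 50 MB limit")
    return gzip.compress(raw)


xml = "<urlset>" + "<url><loc>https://www.example.com/p</loc></url>" * 1000 + "</urlset>"
gz = compress_sitemap(xml)
print(len(xml.encode("utf-8")), "bytes ->", len(gz), "bytes")
```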

Common Mistakes and Misconceptions

Many site owners include redirected URLs in their sitemaps, which wastes crawl budget and confuses search engines about canonical versions. Sitemaps should only reference final destination URLs without intermediate redirects. Similarly, including noindexed pages contradicts the sitemap’s purpose and sends mixed signals to crawlers.

Another frequent error involves listing URLs blocked by robots.txt, which prevents search engines from accessing the very pages you’re trying to highlight. Always verify that sitemap URLs are crawlable and don’t conflict with robots.txt rules. Inconsistencies between these files create indexing problems and reduce overall effectiveness.
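You can check for this conflict before submitting. The following Python sketch feeds your robots.txt rules to the standard `urllib.robotparser` and lists any sitemap URLs a given crawler may not fetch. The `blocked_urls` helper and the default `Googlebot` user agent are assumptions; note that `urllib.robotparser` uses simple prefix matching and does not implement Google's `*` and `$` wildcard extensions, so treat the result as a first pass rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the sitemap URLs that robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse local text, no network fetch
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


robots = "User-agent: *\nDisallow: /checkout/\n"
print(blocked_urls(robots, [
    "https://www.example.com/products/shoe",
    "https://www.example.com/checkout/cart",
]))
```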

Some believe that adding more URLs automatically improves rankings, but quality matters more than quantity. A focused sitemap with 500 important pages is generally more useful than a bloated one with 5,000 low-value URLs. Search engines respond best to sites that guide them efficiently toward meaningful content rather than exhaustive lists.

Tools and Resources for XML Sitemaps

Yoast SEO Plugin

WordPress sites benefit from Yoast SEO, which automatically generates and updates XML sitemaps as you publish content. The plugin includes customisation options for post types, taxonomies, and metadata, making sitemap management effortless for most websites.

Screaming Frog SEO Spider

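This desktop application crawls your site and generates sitemaps based on the URLs it actually discovers. It helps identify broken links and other technical issues while creating accurate sitemap files for submission, and when connected to sources such as Google Analytics, Search Console, or an existing sitemap, it can also flag orphaned pages that a link-following crawl cannot reach.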

Google Search Console

Beyond sitemap submission, Search Console provides detailed reports on indexing status, coverage errors, and sitemap health. Use this free tool to monitor how Google processes your sitemap and troubleshoot any validation issues.

XML Sitemaps Generator

For sites without content management systems, online generators like XML-Sitemaps.com create sitemaps by crawling your domain. These tools work well for small static sites, but you must regenerate and re-upload the file whenever content changes.

Advanced Tips for XML Sitemap Optimisation

Implement Sitemap Index Files

Large sites with multiple content types benefit from organising separate sitemaps for blog posts, products, videos, and images under a master sitemap index. This structure improves organisation, simplifies troubleshooting, and helps search engines process different content types more efficiently.
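A sitemap index is itself a small XML file that lists child sitemaps with `<sitemap>` entries. This minimal Python sketch builds one with `xml.etree.ElementTree`; the `sitemap_index` helper and the child file names (`sitemap-posts.xml` and so on) are hypothetical.

```python
import xml.etree.ElementTree as ET

SM = "http://www.sitemaps.org/schemas/sitemap/0.9"


def sitemap_index(children):
    """children: list of (sitemap_url, lastmod) tuples; lastmod may be None."""
    index = ET.Element("sitemapindex", xmlns=SM)
    for loc, lastmod in children:
        sm = ET.SubElement(index, "sitemap")
        ET.SubElement(sm, "loc").text = loc  # absolute URL of a child sitemap
        if lastmod:
            ET.SubElement(sm, "lastmod").text = lastmod
    return ET.tostring(index, encoding="unicode")


print(sitemap_index([
    ("https://www.example.com/sitemap-posts.xml", "2024-05-01"),
    ("https://www.example.com/sitemap-products.xml", "2024-04-28"),
    ("https://www.example.com/sitemap-videos.xml", None),
]))
```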

Leverage lastmod Dates

Accurate last modification dates help search engines prioritise recently updated content for recrawling. Only update lastmod values when substantial content changes occur, not for minor edits or template updates. Consistent accuracy builds trust with search engines over time.
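One way to keep lastmod honest is to compare the old and new main content and bump the date only when the change is large enough. This is a sketch under stated assumptions: the hypothetical `next_lastmod` helper receives main-content text already separated from the page template, collapses whitespace, and uses the standard `difflib.SequenceMatcher` similarity ratio with an arbitrary 0.95 threshold as a stand-in for "substantial". Tune or replace that heuristic for your own content.

```python
from datetime import date
from difflib import SequenceMatcher


def next_lastmod(old_text, new_text, old_lastmod, today=None, threshold=0.95):
    """Return the lastmod value to publish after an edit.

    old_lastmod: previous lastmod string, or None for a new page.
    threshold: similarity at or above which the edit counts as minor
    (0.95 is an arbitrary assumption).
    """
    today = today or date.today()
    if old_lastmod is None:
        return today.isoformat()
    # Collapse whitespace so reformatting alone never counts as a change.
    old_norm = " ".join(old_text.split())
    new_norm = " ".join(new_text.split())
    similarity = SequenceMatcher(None, old_norm, new_norm, autojunk=False).ratio()
    return today.isoformat() if similarity < threshold else old_lastmod


print(next_lastmod("Hello world", "Hello   world\n", "2024-05-01"))
```

`SequenceMatcher` can be slow on very long pages; for large sites, a cheaper signal such as a content hash combined with a word-count threshold may be more practical.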

Monitor Crawl Stats

Track how search engines interact with your sitemap through the Search Console Crawl Stats report and your server logs. Look for patterns in crawl frequency, discovery rates, and indexing delays. Use this data to optimise update schedules and identify pages that need stronger internal linking.

Segment by Update Frequency

Create separate sitemaps for frequently updated content and for static pages, so the dynamic sitemap can be regenerated more often without touching the stable ones. This targeted approach helps timely content get prompt attention and keeps lastmod changes on stable pages rare, which reduces unnecessary recrawl signals.

XML Sitemaps and Site Architecture

Proper internal linking reduces reliance on sitemaps alone by creating natural crawl paths through your site. A common rule of thumb is that every important page should be reachable within three clicks from your homepage through logical navigation and contextual links. Sitemaps supplement this structure rather than replacing it, helping ensure coverage even for deep pages.
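Click depth is straightforward to measure with a breadth-first search over your internal link graph. This hedged Python sketch assumes you already have the graph as a dictionary mapping each page to the pages it links to (for example, exported from a crawler); the `click_depths` and `too_deep` helpers are hypothetical. Any sitemap URL deeper than three clicks, or unreachable from the homepage, is flagged as a candidate for stronger internal linking.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: minimum clicks from home to each reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:  # first visit is the shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def too_deep(links, home, sitemap_urls, max_depth=3):
    """Sitemap URLs deeper than max_depth clicks or not reachable at all."""
    depth = click_depths(links, home)
    return sorted(u for u in sitemap_urls if depth.get(u, float("inf")) > max_depth)


links = {"/": ["/a"], "/a": ["/b"], "/b": ["/c"], "/c": ["/d"]}
print(too_deep(links, "/", ["/", "/a", "/b", "/c", "/d", "/orphan"]))
```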

Sites with strong information architecture make it easier for search engines to understand content relationships and topical relevance. Organise related pages into clear hierarchies with category pages, subcategories, and individual items. Your sitemap should reflect this logical structure while ensuring every valuable page gets included.

Improve crawl efficiency by linking to important pages from high-authority sections while using sitemaps to catch pages that might otherwise get missed. This combination provides multiple discovery paths and maximises indexing success across your entire domain.

Future Trends in XML Sitemaps

Search engines continue evolving toward better understanding of site structure through alternative methods like JavaScript rendering and natural language processing. Despite these advances, XML sitemaps remain fundamental because they provide explicit guidance that complements algorithmic discovery. Future extensions may add richer metadata for emerging content types.

Mobile-first indexing and Core Web Vitals may influence how sitemaps incorporate performance signals, helping search engines prioritise fast-loading pages. Schema markup integration within sitemaps could provide enhanced context about page types, making crawling decisions more intelligent and resource-efficient.

Voice search optimisation and AI-driven content discovery will likely increase the importance of clear site structure and accurate metadata. Websites that maintain current, well-organised sitemaps will adapt more easily to these changes and maintain competitive advantages in search results.

Final Thoughts

Most SaaS, B2B, and agency teams treat XML sitemaps like an afterthought: create one, hope Google crawls everything, and wonder why pages aren’t ranking. At Drip Ranks, we knew there had to be a better way. So we built a system, not a service.

Forensic audits uncover your highest-ROI opportunities, showing exactly where sitemaps, crawl efficiency, and indexing improvements can drive measurable SEO impact. Intent-mapped strategies ensure every page is discoverable and contributes to the buyer journey, while scalable implementation multiplies results without increasing headcount. The difference? Your SEO becomes measurable, repeatable, and revenue-focused, not a black box that relies on guesswork.

Drip Ranks specialises in optimising XML sitemaps and technical SEO foundations to reduce indexing delays, improve crawl efficiency, and support sustainable organic growth. Contact us today for a comprehensive technical SEO audit and discover how professional sitemap management can strengthen your site’s search visibility and long-term SEO success.