Google’s bots visit millions of websites daily, but they don’t have unlimited time for your site. If you’re managing a growing website and noticing slow indexing or missed pages, you’re likely facing a crawl budget problem that’s costing you traffic and revenue.

Most website owners don’t realise that Google assigns a limited number of page visits to each domain based on authority, server health, and content quality. When your crawl budget gets wasted on low-value pages, your most important content sits unindexed while competitors capture the rankings you were aiming for.

This becomes especially critical for sites with thousands of URLs, ecommerce platforms with product variations, or content hubs publishing frequent updates. Every wasted crawl on a duplicate page or broken link is a missed opportunity for your cornerstone content to reach search results.

This guide reveals exactly how crawl budget works in 2026, why it matters for your rankings, and proven strategies to optimise every single bot visit. You’ll discover actionable techniques that enterprise SEOs use to maximise indexing efficiency and accelerate visibility for revenue generating pages.

What is Crawl Budget?

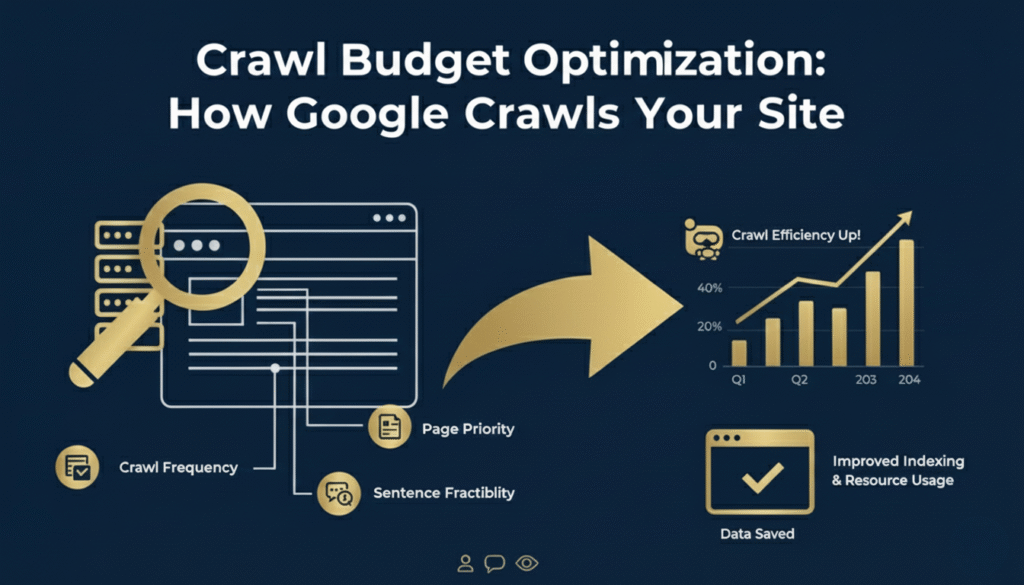

Crawl budget refers to the number of pages Google’s bots will crawl on your website within a specific timeframe. Google allocates this resource based on your site’s crawl demand and crawl capacity, creating a practical limit on indexing opportunities.

Think of it like a daily visitor allowance. If Google gives your site 500 crawls per day but you have 10,000 pages, it would take 20 days to crawl everything once, assuming perfect distribution. This delay directly impacts how quickly new content appears in search results.
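The arithmetic above can be sketched in a few lines of Python. This is only an illustration under the same simplifying assumption as the example (no page is crawled twice before every page has been crawled once); the function name is hypothetical.

```python
import math


def days_to_crawl_once(total_pages: int, crawls_per_day: int) -> int:
    """Days needed to visit every page once, assuming perfect distribution."""
    if crawls_per_day <= 0:
        raise ValueError("crawls_per_day must be positive")
    # Round up: a partial day of crawling still takes a calendar day.
    return math.ceil(total_pages / crawls_per_day)


# The example from the text: 10,000 pages at 500 crawls per day.
print(days_to_crawl_once(10_000, 500))
```

In practice Google revisits popular pages far more often than deep ones, so the real wait for a deep page is usually longer than this best case.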

The concept matters most for larger websites where inefficient crawling creates indexing gaps. A news site publishing 50 articles daily needs aggressive optimisation to ensure fresh content gets discovered within hours, not weeks after publication.

Why is Crawl Budget Important?

Crawl budget optimisation directly influences your site’s indexing speed and search visibility. When Google crawls your most valuable pages frequently, content improvements are picked up and reflected in rankings sooner, which gives you a competitive advantage during crucial seasonal periods.

Poor crawl budget management wastes server resources on pages that generate zero organic value. Duplicate product pages, expired promotions, and pagination links consume bot visits that should go to converting category pages, which undermines your technical and mobile SEO efforts.

For enterprise websites and large ecommerce platforms, crawl budget becomes a growth bottleneck. Sites with millions of URLs can find that only a fraction of their pages, in some cases around 30 percent, get crawled in a given month, leaving revenue-generating content invisible to potential customers searching for specific products.

How Does Crawl Budget Work?

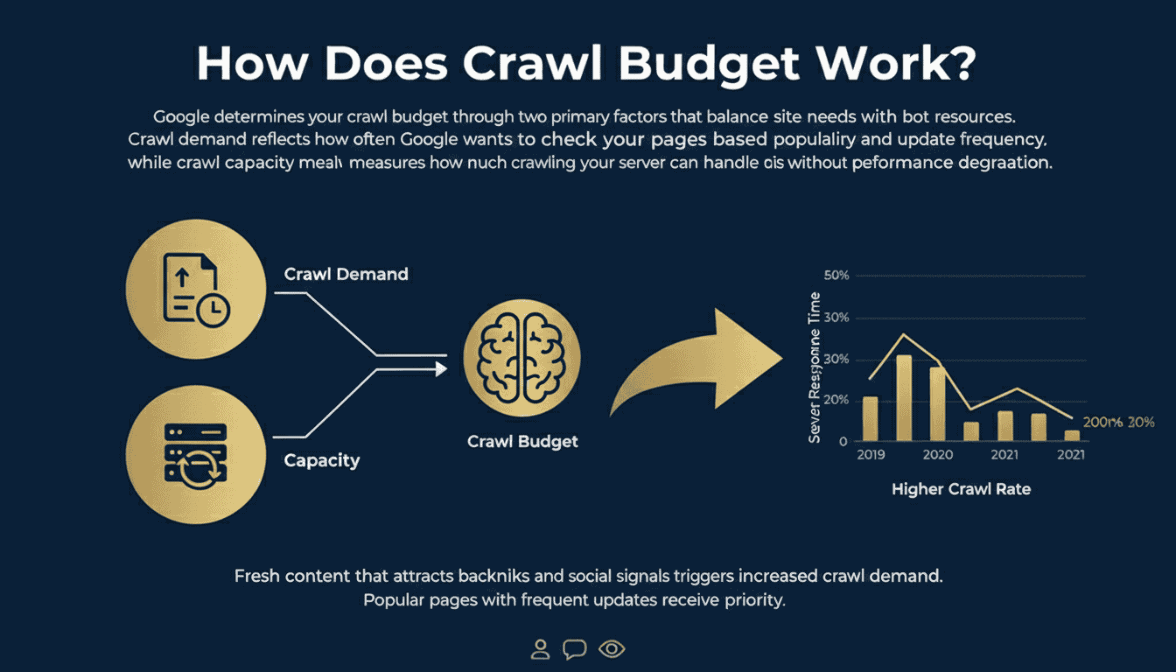

Google determines your crawl budget through two primary factors that balance site needs with bot resources. Crawl demand reflects how often Google wants to check your pages based on popularity and update frequency, while crawl capacity measures how much crawling your server can handle without performance degradation.

When Googlebot requests a page, your server response time affects future crawl rates. Sites that respond quickly and consistently (around 200 milliseconds is a common target) tend to be crawled at a higher rate, because bots can visit more pages per session without overloading infrastructure or triggering timeout errors.

The system adapts continuously based on behavior signals. If Google discovers that 80 percent of your URLs return duplicate content, the algorithm reduces crawl frequency to avoid wasting resources. Fresh content that attracts backlinks and social signals triggers increased crawl demand for related sections.

Popular pages with frequent updates and strong engagement signals receive priority in crawl schedules. Your homepage and top category pages typically get crawled multiple times daily, while deep archive pages might wait weeks between visits unless you actively signal their importance through internal linking.

Best Practices for Crawl Budget Optimisation

Start by blocking low value pages through robots.txt to prevent bot waste on administrative URLs, search result pages, and filtered views. Ecommerce sites commonly have thousands of faceted navigation combinations that create duplicate content without adding unique value for searchers.
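Before deploying robots.txt rules, it helps to test them against sample URLs. The sketch below uses Python’s standard `urllib.robotparser`; the rules and the `example.com` URLs are hypothetical. Note that this parser matches simple path prefixes and does not implement Google’s wildcard extensions (`*` and `$`), so treat it as a rough check rather than an exact model of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking admin, internal search, and filtered views.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Disallow: /filter/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Load robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
for url in (
    "https://example.com/admin/login",
    "https://example.com/search?q=shoes",
    "https://example.com/category/shoes",
):
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url} -> {'crawlable' if allowed else 'blocked'}")
```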

Use your XML sitemap strategically to guide crawlers toward priority pages. Include only indexable URLs that provide user value, removing duplicate variations and outdated content that dilutes crawl focus from pages that drive actual conversions.
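One way to keep a sitemap clean is to generate it from crawl data and filter out anything that should not be indexed. The following is a minimal sketch, assuming you already have each page’s status code, noindex flag, and declared canonical from your own crawler; the `Page` class and the sample URLs are hypothetical.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str
    status: int       # HTTP status code returned by the page
    noindex: bool     # True if the page carries a noindex directive
    canonical: str    # canonical URL the page declares


def is_sitemap_worthy(page: Page) -> bool:
    """Keep only live, indexable pages that are their own canonical."""
    return page.status == 200 and not page.noindex and page.canonical == page.url


def build_sitemap(pages: list[Page]) -> str:
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if is_sitemap_worthy(page):
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = page.url
    return ET.tostring(urlset, encoding="unicode")


pages = [
    Page("https://example.com/shoes", 200, False, "https://example.com/shoes"),
    Page("https://example.com/shoes?sort=price", 200, False, "https://example.com/shoes"),
    Page("https://example.com/old-promo", 404, False, "https://example.com/old-promo"),
    Page("https://example.com/cart", 200, True, "https://example.com/cart"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Only the first page survives the filter here: the sorted variation points its canonical elsewhere, the promotion returns a 404, and the cart is noindexed.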

Fix broken links and redirect chains immediately because each redirect consumes additional crawl resources. When a bot follows three redirects to reach a final destination, it uses four crawl slots instead of one, multiplying inefficiency across thousands of internal links.
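To see how chains add up, you can trace them from a redirect map and then flatten every source so it points straight at its final destination. This is a sketch, assuming the map has been exported from your server or CMS as a plain dictionary; the paths and function names are hypothetical.

```python
def resolve_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Return every URL requested when following redirects from `start`."""
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError(f"redirect loop at {target}")
        if len(chain) - 1 >= max_hops:
            raise ValueError(f"more than {max_hops} hops from {start}")
        chain.append(target)
    return chain


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every redirecting URL directly at its final destination."""
    return {src: resolve_chain(src, redirects)[-1] for src in redirects}


redirects = {"/a": "/b", "/b": "/c", "/c": "/d"}
chain = resolve_chain("/a", redirects)
print(f"{len(chain) - 1} redirects, {len(chain)} requests: {' -> '.join(chain)}")
print(flatten(redirects))
```

With the flattened map, a bot starting at `/a` needs two requests instead of four, and updating internal links to point at `/d` directly brings that down to one.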

Improve server response time through caching, content delivery networks, and database optimisation. Faster responses let Googlebot fetch more pages in the same amount of time, although the exact gain depends on your site and infrastructure.

Update content regularly on important pages to increase crawl demand. Google prioritises pages that show consistent updates, especially when those changes attract fresh backlinks or social mentions that signal ongoing relevance.

Implement strategic internal linking that creates clear hierarchies and passes crawl equity to deep pages. Orphan pages without internal links rarely get crawled regardless of their quality, while well connected pages receive consistent bot attention.
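Orphan pages and deep pages can be found with a breadth-first walk over your internal link graph. The sketch below assumes you have exported that graph (for example from a site crawler) as a dictionary of page to linked pages; the sample paths are hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from the homepage."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(all_pages: set[str], links: dict[str, list[str]], home: str) -> set[str]:
    """Pages that exist but cannot be reached through internal links."""
    return all_pages - set(click_depths(links, home))


links = {"/": ["/shoes", "/about"], "/shoes": ["/shoes/red"]}
all_pages = {"/", "/shoes", "/about", "/shoes/red", "/old-landing"}

depths = click_depths(links, "/")
orphans = orphan_pages(all_pages, links, "/")
print(depths)
print(orphans)
```

Pages with a high click depth are good candidates for extra links from category or hub pages, while orphans need at least one internal link before bots can reach them by following links.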

Common Crawl Budget Mistakes and Misconceptions

Many site owners believe small websites need crawl budget optimisation, but Google’s own guidance is aimed at very large sites (around a million or more pages) and at sites with 10,000 or more pages whose content changes daily. Sites below those thresholds rarely face crawl limitations. Spending resources optimising crawl budget for a 500 page blog diverts attention from content quality and backlink acquisition.

Blocking pages via robots.txt while keeping them in your sitemap creates conflicting signals that confuse crawlers. Google receives mixed messages about page importance and will typically respect the robots.txt block, so the sitemap entry achieves nothing.
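This kind of conflict is easy to detect automatically. The sketch below reads the `<loc>` entries from a sitemap and reports any that robots.txt disallows. The sample robots.txt and sitemap are hypothetical, and Python’s `urllib.robotparser` only does prefix matching, so results can differ from Googlebot for rules that use wildcards.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_locs(sitemap_xml: str) -> list[str]:
    """Extract every <loc> URL from a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]


def blocked_sitemap_urls(robots_txt: str, sitemap_xml: str, agent: str = "Googlebot") -> list[str]:
    """Sitemap URLs that the given user agent is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_locs(sitemap_xml) if not parser.can_fetch(agent, url)]


ROBOTS_TXT = "User-agent: *\nDisallow: /search\n"
SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/shoes</loc></url>
  <url><loc>https://example.com/search?q=boots</loc></url>
</urlset>
"""

conflicts = blocked_sitemap_urls(ROBOTS_TXT, SITEMAP_XML)
print(conflicts)
```

Each URL in the result should either be removed from the sitemap or unblocked in robots.txt, depending on whether you actually want it crawled.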

Some SEOs assume that more crawls always improve rankings, but crawl frequency doesn’t directly impact position. Google crawls pages to discover content updates, but ranking depends on relevance, authority, and user experience factors independent of crawl rate.

Using noindex tags to manage crawl budget actually wastes more resources than it saves. Googlebot must still crawl noindexed pages to check their status, consuming budget without indexing benefit. Blocking via robots.txt is more efficient for truly unwanted URLs, though a blocked URL can still appear in the index without its content if other pages link to it.

Ignoring mobile crawling creates major inefficiencies because, under mobile-first indexing, Google primarily crawls the mobile version of your site. Desktop-only optimisations miss the crawl patterns that actually determine indexing behavior.

Tools and Resources for Crawl Budget Management

Google Search Console provides crawl stats that reveal exactly how Googlebot interacts with your site. The crawl stats report shows daily crawl requests, download sizes, and response times, helping you identify sudden changes that indicate technical problems or content issues.

Screaming Frog SEO Spider crawls your site like Googlebot, revealing duplicate content, broken links, and redirect chains that waste budget. Running monthly crawls with this tool helps you catch issues before they accumulate into major indexing problems.

Log file analysis tools like Botify or Screaming Frog Log Analyser show actual Googlebot behavior on your server. These tools reveal which pages Google crawls most frequently, helping you align your optimisation efforts with bot priorities rather than assumptions.
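If you want a quick look before investing in a dedicated tool, a short script can count Googlebot requests per URL. This is a minimal sketch, assuming your server writes the common "combined" access log format; the sample lines are made up. It identifies Googlebot by user agent string only, which can be spoofed, so a production version should also verify the requesting IP (Google recommends a reverse DNS lookup).

```python
import re
from collections import Counter

# Combined log format: ip, identity, user, [time], "request", status, bytes, "referer", "agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines) -> Counter:
    """Count requests per path where the user agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample_log = [
    f'66.249.66.1 - - [10/Jan/2026:06:25:14 +0000] "GET /shoes HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2026:06:25:20 +0000] "GET /search?q=x HTTP/1.1" 200 812 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2026:09:02:41 +0000] "GET /shoes HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Jan/2026:09:03:00 +0000] "GET /shoes HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

hits = googlebot_hits(sample_log)
for path, count in hits.most_common():
    print(count, path)
```

Comparing the most-crawled paths against your list of priority pages shows where bot attention and business value diverge.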

Server monitoring platforms track response times and identify performance bottlenecks that limit crawl capacity. Tools like New Relic or Datadog help you correlate server slowdowns with reduced crawl rates, making infrastructure problems visible.

Google’s PageSpeed Insights identifies specific performance issues that affect user experience and, where they involve slow server responses, crawl efficiency. Fixing the server-side issues flagged here can raise the crawl rate your site is able to sustain.

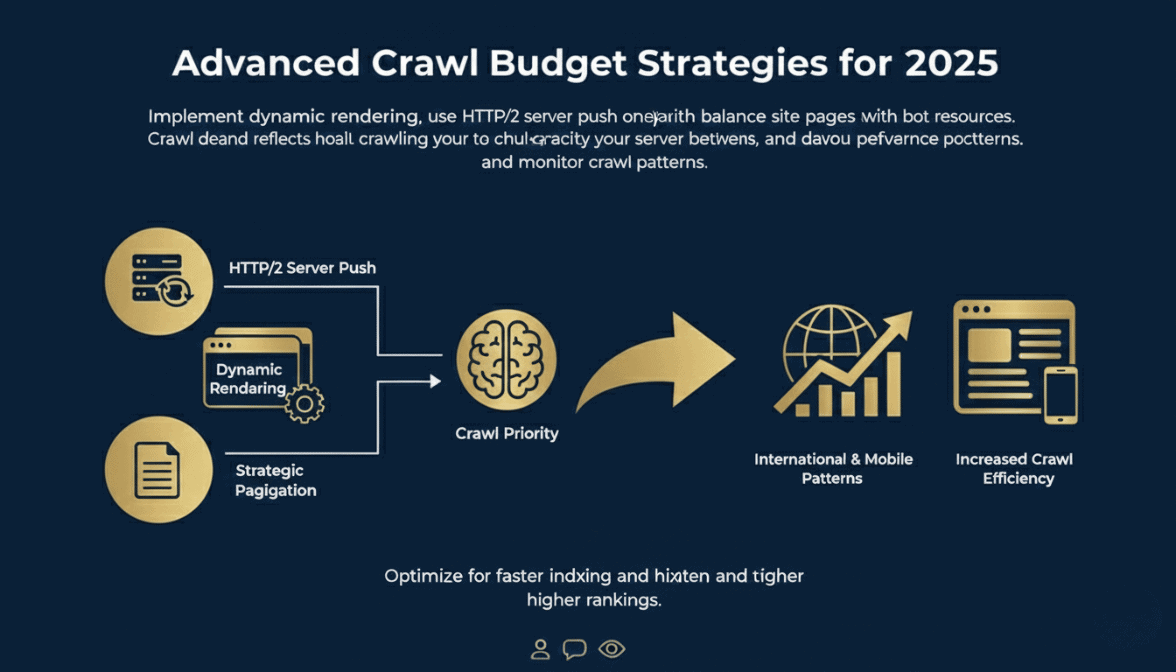

Advanced Crawl Budget Strategies for 2026

Reduce the rendering burden on Googlebot for JavaScript heavy sites. Dynamic rendering, which serves pre rendered HTML to bots while keeping JavaScript for users, can cut processing time, but Google now describes it as a workaround rather than a long term solution and recommends server side rendering, static rendering, or hydration instead.

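Serve your site over HTTP/2 so Googlebot can reuse a single connection for many requests. This reduces connection overhead during crawling and lowers the load each crawl session places on your server. Avoid relying on HTTP/2 server push for this purpose: Chrome has removed support for it, and Googlebot is not documented as depending on it.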

Create strategic pagination structures that balance crawl depth with user experience. Implementing “view all” pages for important product categories gives Google single page access to complete inventories without crawling hundreds of paginated URLs.

Segment your site into crawl priority zones using subdirectories and clear URL structures. Place high value converting pages in easily discoverable paths while moving supplementary content to deeper hierarchies that receive proportionally lower crawl attention.

Monitor international and mobile crawl patterns separately, because crawl activity can differ noticeably between site versions, particularly when they live on separate hostnames such as mobile or country subdomains. Hreflang implementations and responsive design choices directly impact how efficiently bots discover and index multilingual content variations, especially when managing technical mobile SEO requirements.

How Crawl Budget Affects Different Site Types

Ecommerce platforms face unique crawl budget challenges due to product variations, filters, and sorting options that create exponential URL combinations. A single product offered in five colors and four sizes generates 20 URLs that often contain duplicate content.
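The multiplication is easy to reproduce. The sketch below enumerates the variant URLs for one product; the base URL and the `color` and `size` parameter names are hypothetical.

```python
from itertools import product
from urllib.parse import urlencode


def variant_urls(base_url: str, colors: list[str], sizes: list[str]) -> list[str]:
    """One URL per color and size combination of a single product."""
    return [
        f"{base_url}?{urlencode({'color': color, 'size': size})}"
        for color, size in product(colors, sizes)
    ]


colors = ["black", "white", "red", "blue", "green"]
sizes = ["s", "m", "l", "xl"]
urls = variant_urls("https://example.com/products/tee", colors, sizes)

print(len(urls))
print(urls[0])
```

Adding a third option, such as three sort orders, would triple the count again, which is why parameterised variants are usually consolidated with a canonical URL or kept out of the crawl.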

News and media sites need aggressive crawl optimisation because freshness directly impacts rankings. Articles published during peak news cycles must reach Google’s index within minutes to capture time sensitive search traffic before competitors.

Small business websites with under 500 pages rarely encounter crawl budget limitations. These sites benefit more from focusing on content quality, local citations, and customer reviews rather than technical crawl optimisations designed for enterprise scale.

Marketplace and directory sites with user generated content face crawl dilution from low quality pages. Profile pages with minimal information and inactive listings consume budget without providing indexable value, requiring aggressive quality filters and robots.txt restrictions.

SaaS and software sites with extensive documentation often create crawl inefficiencies through versioned content. Maintaining separate documentation for each product version multiplies page counts while fragmenting authority across near duplicate resources.

Measuring Crawl Budget Success

Track your indexation rate by comparing submitted sitemap URLs against indexed pages in Google Search Console. As a rule of thumb, a healthy site should see 85 percent or higher indexation for submitted pages, with delays under seven days for new content.
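The comparison itself is a simple set calculation once you have both lists. This sketch assumes you have exported the submitted and indexed URLs yourself (for example from your sitemap and from Search Console reports); the function name and sample URLs are hypothetical.

```python
def indexation_report(submitted: set[str], indexed: set[str]) -> tuple[float, set[str]]:
    """Return the share of submitted URLs that are indexed, plus the missing ones."""
    if not submitted:
        return 0.0, set()
    missing = submitted - indexed
    rate = (len(submitted) - len(missing)) / len(submitted)
    return rate, missing


submitted = {
    "https://example.com/",
    "https://example.com/shoes",
    "https://example.com/shoes/red",
    "https://example.com/about",
}
indexed = {
    "https://example.com/",
    "https://example.com/shoes",
    "https://example.com/about",
}

rate, missing = indexation_report(submitted, indexed)
print(f"{rate:.0%} indexed; missing: {sorted(missing)}")
```

Tracking the missing set over time is often more useful than the percentage alone, because it shows which sections of the site are being skipped.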

Monitor crawl frequency changes for priority pages using log file analysis. Successful optimisation increases crawl rates on converting pages while reducing wasted crawls on administrative and duplicate URLs.

Measure server response time trends to ensure infrastructure keeps pace with content growth. Degrading response times signal capacity problems that will eventually throttle your crawl budget regardless of other optimisations.

Analyse organic traffic growth for recently published content. Faster indexing from improved crawl budget should correlate with quicker traffic acquisition for time sensitive content and new product launches.

Review Google Search Console error reports for crawl anomalies. Sudden spikes in 404 errors or server errors indicate problems that are actively wasting crawl budget on broken resources.

Final Words

Most SaaS, B2B, and agency teams treat crawl budget like an afterthought: hope Google indexes important pages, ignore wasted crawls, and wonder why rankings aren’t predictable. At Drip Ranks, we knew there had to be a better way. So we built a system, not a service.

Forensic audits uncover your highest ROI opportunities, identifying exactly where crawl inefficiencies are holding your site back. Intent-mapped strategies ensure bots prioritise your most valuable pages, while scalable execution multiplies results without increasing headcount. The difference? Your SEO becomes measurable, repeatable, and revenue-focused, not a black box that relies on guesswork.

Drip Ranks specialises in technical SEO audits that reclaim wasted crawl budget and accelerate indexing for priority pages. Our team uses enterprise-grade log file analysis and custom crawl simulations to build optimisation roadmaps that deliver faster rankings. Contact us today for a comprehensive crawl budget audit and start capturing your full search potential.